Welcome to the next entry in my series where I’ll be chronicling the journey of building a new data platform, week by week. This project is not just about the technical aspects of platform development but also about the business insights, stakeholder interactions, and the tools that make it all possible. I invite you into my world and hope you’ll come along for the ride.

I recently joined Gallo Mechanical as their VP of Analytics. Gallo is a national leader in the construction services industry, and from my first conversation with them, I knew this was a place I wanted to be. The success of Gallo is a testament to the strength and focus of its leadership and employees. I’m excited to help bring a data and analytics mindset and apply it to the day-to-day and strategic operations of the business.

In case you missed it, here are the previous weeks:

Week 1 – Laying the foundation

Week 2 & 3 – Foundation & Planning

Week 4 – Data Modeling

Let’s dive into Week 5.

Where is my time going this week?

Modeling, modeling and more modeling

Structure

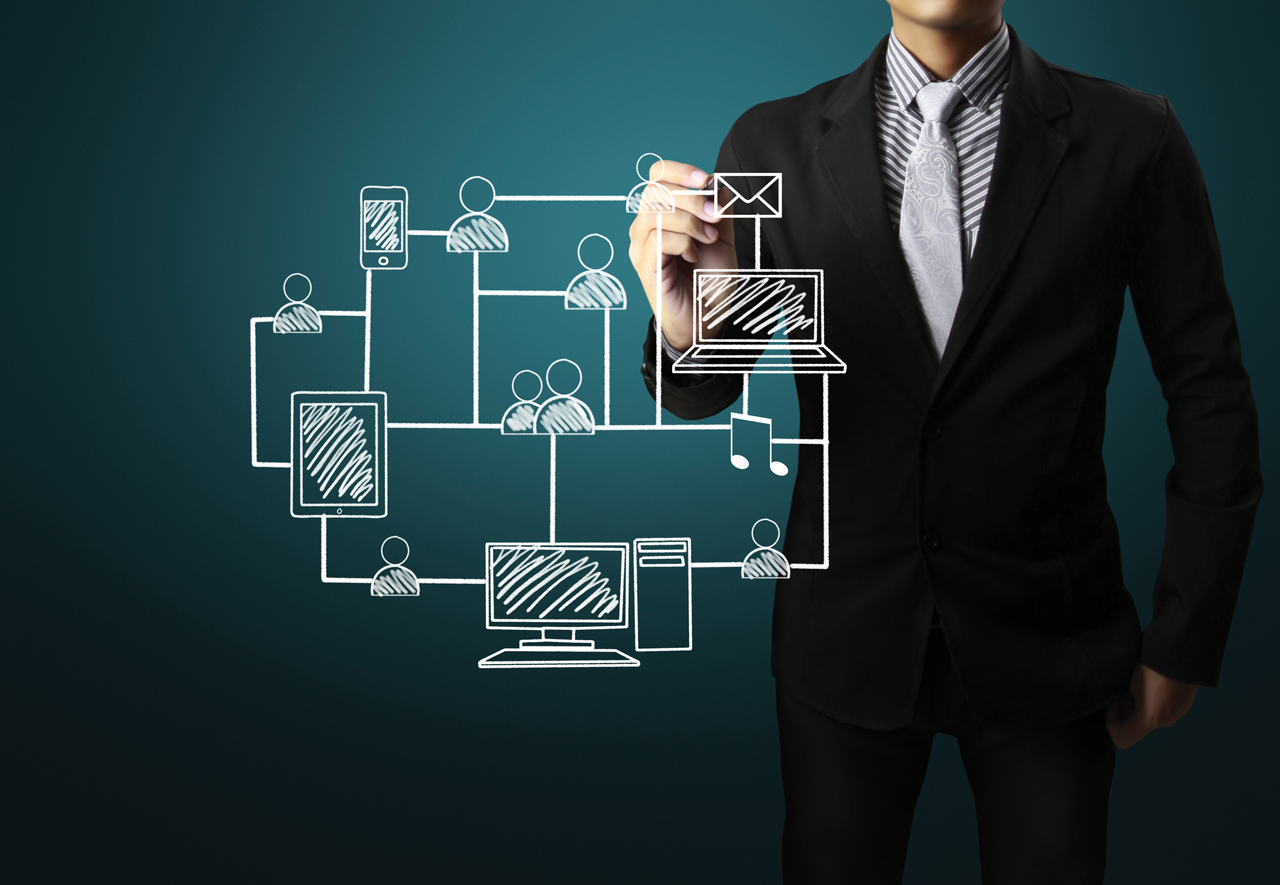

This week, I went heads down on modeling all the data I could. I’m taking a systematic approach to ingesting the APIs from VISTA. Working with them, we are turning on 1-2 APIs per week so I can ingest them via portable.io, then take that raw data, clean it up and build out the core entity tables. Each API brings in roughly 20 views. This week I did our service module (SM) and modeled all the work orders that were ingested.

Interesting to note: it is pretty common for operational data in an ERP or other operations platform to be transactional, but not a log of actions. This means that every day, as actions occur, the existing row is updated in place with the new data. Because records are overwritten, historical value can be lost. For the tables we are bringing in, we are storing each load with a process date, and as the platform matures we will be able to aggregate these into SCD (slowly changing dimension) tables. I’m not focused on this now, but it’s critical that you start capturing what is happening as soon as you can.
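To make the idea concrete, here is a minimal sketch of how daily snapshots stamped with a process date could later be collapsed into SCD Type 2 rows. This is not what is running in the platform today. The work order keys, the `status` field, the dates and the `snapshots_to_scd2` helper are all made up for illustration, and in practice this would more likely be done in SQL inside the warehouse.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Scd2Row:
    key: str                   # business key, e.g. a work order number (hypothetical)
    attrs: dict                # tracked attributes as of valid_from
    valid_from: date           # first process date this version was seen
    valid_to: Optional[date]   # process date of the next version; None = current


def snapshots_to_scd2(snapshots):
    """Collapse (process_date, key, attrs) snapshots into SCD Type 2 rows."""
    history = []
    current = {}  # key -> the open (current) Scd2Row for that key
    # Walk each key's snapshots in process-date order.
    for process_date, key, attrs in sorted(snapshots, key=lambda s: (s[1], s[0])):
        open_row = current.get(key)
        if open_row is not None and open_row.attrs == attrs:
            continue  # nothing changed since the last snapshot
        if open_row is not None:
            open_row.valid_to = process_date  # close the previous version
        new_row = Scd2Row(key, dict(attrs), process_date, None)
        history.append(new_row)
        current[key] = new_row
    return history


# Hypothetical daily loads of a work order table.
snapshots = [
    (date(2023, 12, 11), "WO-1001", {"status": "open"}),
    (date(2023, 12, 12), "WO-1001", {"status": "open"}),
    (date(2023, 12, 13), "WO-1001", {"status": "closed"}),
    (date(2023, 12, 12), "WO-1002", {"status": "open"}),
]

rows = snapshots_to_scd2(snapshots)
for row in rows:
    print(row.key, row.attrs, row.valid_from, row.valid_to)
```

The unchanged snapshot on the second day produces no new row, so the history only grows when something actually changes. The sketch does not handle records that disappear from the source; that would need an extra rule for closing out keys missing from the latest load.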

Here is a basic architecture

Exploration via HEX reporting

I brought in a tool called HEX (thanks for turning me on to this, Harrison Palmer). This tool allows us to quickly build one-off reports using SQL and/or Python to create visualizations for ad-hoc requests. You should always have a tool in which you can prototype reports quickly, so that you don’t spend a ton of time building dashboards in your BI tool that people will look at once or twice.

Focus

The focus has been on taking the raw data and getting it into structured entities. To meet my goal of delivering our first automation and reports in January, the main tables need to be built out. I expect the next 2-3 weeks to be much of the same, in addition to negotiating our contracts for Snowflake and finding a BI tool (ThoughtSpot vs Sigma).

Vendor and Software Selection Continues

I had a couple of meetings and product demos this week. A lot of thought is going into our BI tool selection. We don’t have a tremendous amount of data today, so we need a tool that can scale with our needs. I also want to make sure the implementation is as easy as possible (I am only 1 person right now).

Always consider professional services as part of any implementation, especially for tools that you have never used before. It’s also a less expensive way to scale yourself if you are a party of one.

Action Summary

- Ingested more data via portable to the raw warehouse

- Continued to design the core data structures that I have access to

- Continued reviewing BI and automation platforms

Looking Ahead

With the holidays coming up, we will pick things up in January. Have a blessed holiday!

Join me next week as we delve deeper into the intricacies of platform development and witness how a concept gradually transforms into a tangible, functioning entity. Your thoughts and suggestions are always welcome, so feel free to email me your comments at john@slingspace.com.